Presented By: Industrial & Operations Engineering

899 Seminar Series: Holden. Lee, Johns Hopkins University

Provable learning and sampling from multimodal distributions with diffusion models

Presenter Bio

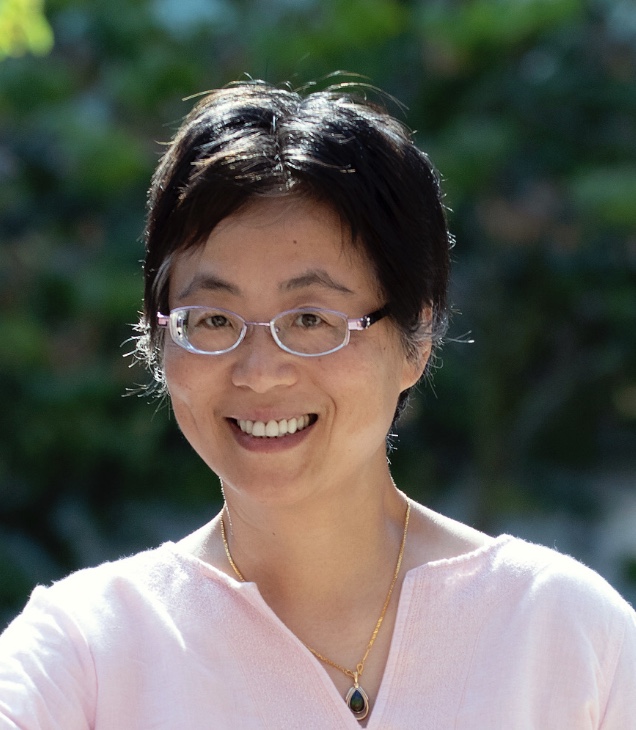

Holden Lee is an assistant professor of applied mathematics and statistics at Johns Hopkins University, working on theoretical machine learning and applied probability. His research focuses on mathematical foundations for sampling algorithms and generative modeling, with applications to language models. He was a co-organizer for the NeurIPS 2024 workshop on Creativity & Generative AI. He obtained his Ph.D. in mathematics from Princeton.

Abstract

Multimodal distributions such as mixture models pose significant challenges for both learning and sampling algorithms, but score-based and diffusion models have enjoyed widespread empirical success on these problems. How can we understand their success from a theoretical perspective?

For learning, we consider Gaussian mixtures and show diffusion models can learn a mixture of k isotropic Gaussians in R^n with quasi-polynomial time and sample complexity (exp(poly log((n+k)/epsilon))), under a minimum weight assumption. This gives a completely different, analytic proof of a result previously known only using a specialized algebraic approach, and moreover, it extends to give the first efficient algorithm for learning a non-parametric family of Gaussian convolutions of distribution supported on sets with a small covering number.

For sampling, we explain the surprising observation that despite the slow mixing of Langevin dynamics, it is possible to sample from multimodal distributions by learning only the vanilla score, as long as we use data-based initialization. We consider the more general problem of sampling using a Markov chain without global mixing given a small number of samples from the stationary measure, showing efficient sampling with a number of data samples almost linear in the number of modes; this also covers the case of Glauber dynamics with pseudolikelihood estimation.

Based on joint work with Khashayar Gatmiry and Jonathan Kelner (MIT), Frederic Koehler (UChicago), Thuy-Duong Vuong (UC Berkeley) on the papers https://arxiv.org/abs/2404.18869 and https://arxiv.org/abs/2411.09117.

This event is a part of our 899 seminar series.

Holden Lee is an assistant professor of applied mathematics and statistics at Johns Hopkins University, working on theoretical machine learning and applied probability. His research focuses on mathematical foundations for sampling algorithms and generative modeling, with applications to language models. He was a co-organizer for the NeurIPS 2024 workshop on Creativity & Generative AI. He obtained his Ph.D. in mathematics from Princeton.

Abstract

Multimodal distributions such as mixture models pose significant challenges for both learning and sampling algorithms, but score-based and diffusion models have enjoyed widespread empirical success on these problems. How can we understand their success from a theoretical perspective?

For learning, we consider Gaussian mixtures and show diffusion models can learn a mixture of k isotropic Gaussians in R^n with quasi-polynomial time and sample complexity (exp(poly log((n+k)/epsilon))), under a minimum weight assumption. This gives a completely different, analytic proof of a result previously known only using a specialized algebraic approach, and moreover, it extends to give the first efficient algorithm for learning a non-parametric family of Gaussian convolutions of distribution supported on sets with a small covering number.

For sampling, we explain the surprising observation that despite the slow mixing of Langevin dynamics, it is possible to sample from multimodal distributions by learning only the vanilla score, as long as we use data-based initialization. We consider the more general problem of sampling using a Markov chain without global mixing given a small number of samples from the stationary measure, showing efficient sampling with a number of data samples almost linear in the number of modes; this also covers the case of Glauber dynamics with pseudolikelihood estimation.

Based on joint work with Khashayar Gatmiry and Jonathan Kelner (MIT), Frederic Koehler (UChicago), Thuy-Duong Vuong (UC Berkeley) on the papers https://arxiv.org/abs/2404.18869 and https://arxiv.org/abs/2411.09117.

This event is a part of our 899 seminar series.