Presented By: Michigan Robotics

Simple and Scalable Edge AI – a Configurable Dataflow Architecture with At-Memory Computing

Wei Lu and Mohammed A. Zidan

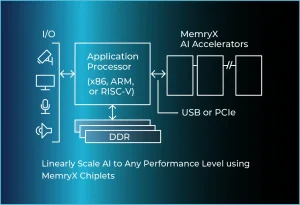

The ability to run AI efficiently at the edge will impact a broad range of applications, from smart IoT and autonomous driving to robotics. However, as AI model sizes grow rapidly, so do the cost of the hardware required to run them and the energy they consume, severely hindering the deployment of AI in edge devices. Careful analysis shows that the energy cost and throughput bottlenecks when running AI tasks are caused not by compute operations but by constant data movement. Solving these problems requires new computing architectures that address the data movement problem and minimize its cost. By performing computing at memory and minimizing data movement through a dataflow architecture, MemryX’s AI accelerator chips fundamentally solve the throughput and energy challenges in edge AI applications. In this talk, we will present the design principles behind MemryX’s architecture, use case examples, and features of the Software Development Kit (SDK) that make AI implementation easy and scalable for edge applications such as robotics.

Wei Lu is a Professor in the Electrical Engineering and Computer Science department at the University of Michigan and a co-founder of MemryX Inc. He received his B.S. in physics from Tsinghua University, Beijing, China, in 1996, and his Ph.D. in physics from Rice University, Houston, TX, in 2003. From 2003 to 2005, he was a postdoctoral research fellow at Harvard University, Cambridge, MA. He joined the faculty of the University of Michigan in 2005. His research interests include resistive random-access memory (RRAM), memristor-based logic circuits, neuromorphic computing systems, and low-dimensional systems. To date, Prof. Lu has published over 150 journal and conference articles, with 34,000 citations and an h-index of 82. He is an IEEE Fellow.

Mohammed A. Zidan currently leads the architecture team at MemryX Inc. Before joining MemryX, he was a postdoctoral fellow at the University of Michigan, Ann Arbor. Mohammed received his Ph.D. in electrical engineering from KAUST with a GPA of 4.0. He also earned his MSc and BSc in electronics and communications engineering from Cairo University and the IAET, respectively, graduating as valedictorian in both. Mohammed has 10 granted US patents and more than 40 published papers and book chapters in his field. He has also contributed to two IEEE standards. Mohammed received the IEEE CAS Society Pre-Doctoral Scholarship and the IEEE CICC Outstanding Invited Paper Awards.
